1. Overview

In this article, we’re going to look at the Sudoku puzzle and the algorithms used to solve it.

Next, we’ll implement solutions in Java. The first solution will be a simple brute-force attack. The second will utilize the Dancing Links technique.

Let’s keep in mind that we’re going to focus on the algorithms and not on the OOP design.

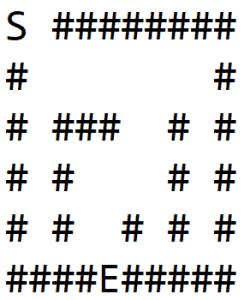

2. The Sudoku Puzzle

Simply put, Sudoku is a combinatorial number placement puzzle with a 9 x 9 cell grid partially filled in with numbers from 1 to 9. The goal is to fill the remaining blank fields so that each row and each column contains every number from 1 to 9 exactly once.

What’s more, no 3 x 3 subsection of the grid can contain a duplicated number either. The level of difficulty naturally rises with the number of blank fields on the board.

2.1. Test Board

To make our solution more interesting and to validate the algorithm, we’re going to use the “world’s hardest sudoku” board, which is:

    8 . . . . . . . .
    . . 3 6 . . . . .
    . 7 . . 9 . 2 . .
    . 5 . . . 7 . . .
    . . . . 4 5 7 . .
    . . . 1 . . . 3 .
    . . 1 . . . . 6 8
    . . 8 5 . . . 1 .
    . 9 . . . . 4 . .

2.2. Solved Board

And, to spoil the solution quickly – the correctly solved puzzle will give us the following result:

    8 1 2 7 5 3 6 4 9
    9 4 3 6 8 2 1 7 5
    6 7 5 4 9 1 2 8 3
    1 5 4 2 3 7 8 9 6
    3 6 9 8 4 5 7 2 1
    2 8 7 1 6 9 5 3 4
    5 2 1 9 7 4 3 6 8
    4 3 8 5 2 6 9 1 7
    7 9 6 3 1 8 4 5 2
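To double-check a finished grid like this one, we can verify that every row, column and 3 x 3 subsection holds each digit from 1 to 9 exactly once. The following is only a minimal sketch; the names SolvedBoardCheck, isPermutation and isSolved are illustrative and not part of the solvers discussed in this article.

```java
import java.util.stream.IntStream;

class SolvedBoardCheck {

    static final int[][] SOLVED = {
        { 8, 1, 2, 7, 5, 3, 6, 4, 9 },
        { 9, 4, 3, 6, 8, 2, 1, 7, 5 },
        { 6, 7, 5, 4, 9, 1, 2, 8, 3 },
        { 1, 5, 4, 2, 3, 7, 8, 9, 6 },
        { 3, 6, 9, 8, 4, 5, 7, 2, 1 },
        { 2, 8, 7, 1, 6, 9, 5, 3, 4 },
        { 5, 2, 1, 9, 7, 4, 3, 6, 8 },
        { 4, 3, 8, 5, 2, 6, 9, 1, 7 },
        { 7, 9, 6, 3, 1, 8, 4, 5, 2 }
    };

    // Returns true when the nine values are exactly the digits 1..9, each used once.
    static boolean isPermutation(int[] values) {
        boolean[] seen = new boolean[9];
        for (int v : values) {
            if (v < 1 || v > 9 || seen[v - 1]) {
                return false;
            }
            seen[v - 1] = true;
        }
        return true;
    }

    // Checks row k, column k and subsection k for k = 0..8 of a completed board.
    static boolean isSolved(int[][] board) {
        for (int i = 0; i < 9; i++) {
            final int k = i;
            int[] column = IntStream.range(0, 9).map(r -> board[r][k]).toArray();
            int[] box = IntStream.range(0, 9)
              .map(j -> board[(k / 3) * 3 + j / 3][(k % 3) * 3 + j % 3])
              .toArray();
            if (!isPermutation(board[k]) || !isPermutation(column) || !isPermutation(box)) {
                return false;
            }
        }
        return true;
    }
}
```

Calling SolvedBoardCheck.isSolved(SolvedBoardCheck.SOLVED) returns true, and changing any single cell to a different digit makes the check fail.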

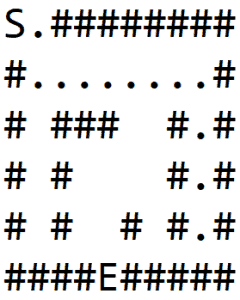

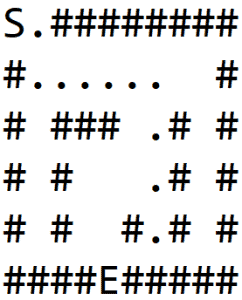

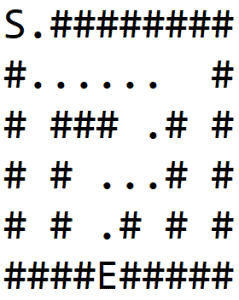

3. Backtracking Algorithm

3.1. Introduction

The backtracking algorithm tries to solve the puzzle by testing each empty cell for a valid value.

If a value doesn’t violate any constraint, the algorithm moves on to the next empty cell and repeats the same checks there.

If there’s a violation, it increments the cell value. Once the value of the cell reaches 9 and there’s still a violation, the algorithm moves back to the previous cell and increases the value of that cell.

In the worst case, it tries all possible combinations.

3.2. Solution

First of all, let’s define our board as a two-dimensional array of integers. We will use 0 as our empty cell.

    int[][] board = {
        { 8, 0, 0, 0, 0, 0, 0, 0, 0 },
        { 0, 0, 3, 6, 0, 0, 0, 0, 0 },
        { 0, 7, 0, 0, 9, 0, 2, 0, 0 },
        { 0, 5, 0, 0, 0, 7, 0, 0, 0 },
        { 0, 0, 0, 0, 4, 5, 7, 0, 0 },
        { 0, 0, 0, 1, 0, 0, 0, 3, 0 },
        { 0, 0, 1, 0, 0, 0, 0, 6, 8 },
        { 0, 0, 8, 5, 0, 0, 0, 1, 0 },
        { 0, 9, 0, 0, 0, 0, 4, 0, 0 }
    };

Let’s create a solve() method that takes the board as the input parameter and iterates through rows, columns and values testing each cell for a valid solution:

    private boolean solve(int[][] board) {
        for (int row = BOARD_START_INDEX; row < BOARD_SIZE; row++) {
            for (int column = BOARD_START_INDEX; column < BOARD_SIZE; column++) {
                if (board[row][column] == NO_VALUE) {
                    // try every candidate value in the first empty cell
                    for (int k = MIN_VALUE; k <= MAX_VALUE; k++) {
                        board[row][column] = k;
                        if (isValid(board, row, column) && solve(board)) {
                            return true;
                        }
                        board[row][column] = NO_VALUE;
                    }
                    // no candidate fits here, so backtrack to the previous cell
                    return false;
                }
            }
        }
        // no empty cell left: the board is solved
        return true;
    }

Another method that we need is isValid(), which checks the Sudoku constraints, i.e., whether the row, column, and 3 x 3 grid are valid:

    private boolean isValid(int[][] board, int row, int column) {
        return (rowConstraint(board, row)
          && columnConstraint(board, column)
          && subsectionConstraint(board, row, column));
    }

These three checks are relatively similar. First, let’s start with row checks:

    // uses java.util.stream.IntStream
    private boolean rowConstraint(int[][] board, int row) {
        boolean[] constraint = new boolean[BOARD_SIZE];
        return IntStream.range(BOARD_START_INDEX, BOARD_SIZE)
          .allMatch(column -> checkConstraint(board, row, constraint, column));
    }

Next, we use almost identical code to validate the column:

    private boolean columnConstraint(int[][] board, int column) {
        boolean[] constraint = new boolean[BOARD_SIZE];
        return IntStream.range(BOARD_START_INDEX, BOARD_SIZE)
          .allMatch(row -> checkConstraint(board, row, constraint, column));
    }

Furthermore, we need to validate the 3 x 3 subsection:

    private boolean subsectionConstraint(int[][] board, int row, int column) {
        boolean[] constraint = new boolean[BOARD_SIZE];
        int subsectionRowStart = (row / SUBSECTION_SIZE) * SUBSECTION_SIZE;
        int subsectionRowEnd = subsectionRowStart + SUBSECTION_SIZE;

        int subsectionColumnStart = (column / SUBSECTION_SIZE) * SUBSECTION_SIZE;
        int subsectionColumnEnd = subsectionColumnStart + SUBSECTION_SIZE;

        for (int r = subsectionRowStart; r < subsectionRowEnd; r++) {
            for (int c = subsectionColumnStart; c < subsectionColumnEnd; c++) {
                if (!checkConstraint(board, r, constraint, c)) return false;
            }
        }
        return true;
    }

Finally, we need a checkConstraint() method:

    boolean checkConstraint(
      int[][] board,
      int row,
      boolean[] constraint,
      int column) {
        if (board[row][column] != NO_VALUE) {
            if (!constraint[board[row][column] - 1]) {
                constraint[board[row][column] - 1] = true;
            } else {
                return false;
            }
        }
        return true;
    }
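To see how the boolean array catches duplicates, here is a small stand-alone sketch of the same idea applied to a single row. The class RowConstraintDemo and the method rowIsValid are hypothetical names used only for this illustration.

```java
class RowConstraintDemo {

    static final int NO_VALUE = 0;

    // Mirrors the duplicate check for one row: empty cells are skipped,
    // and a value that was already seen makes the row invalid.
    static boolean rowIsValid(int[] row) {
        boolean[] seen = new boolean[row.length];
        for (int value : row) {
            if (value == NO_VALUE) {
                continue;
            }
            if (seen[value - 1]) {
                return false;
            }
            seen[value - 1] = true;
        }
        return true;
    }
}
```

A row such as { 8, 0, 0, 0, 0, 0, 0, 0, 0 } passes, while { 8, 0, 8, 0, 0, 0, 0, 0, 0 } fails because 8 is seen twice.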

Once all of these checks pass, the isValid() method returns true.

We’re almost ready to test the solution now. The algorithm is done. However, it returns true or false only.

Therefore, to visually check the board, we just need to print out the result. Obviously, this isn’t part of the algorithm.

    private void printBoard() {
        for (int row = BOARD_START_INDEX; row < BOARD_SIZE; row++) {
            for (int column = BOARD_START_INDEX; column < BOARD_SIZE; column++) {
                System.out.print(board[row][column] + " ");
            }
            System.out.println();
        }
    }

We’ve successfully implemented a backtracking algorithm that solves the Sudoku puzzle!

Obviously, there’s room for improvement, as the algorithm naively checks each possible combination over and over again (even though we know that a particular partial solution is already invalid).
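One possible improvement, which is our own suggestion rather than part of the implementation above, is to test a candidate against its row, column and subsection before writing it, instead of validating whole units after every placement. The sketch below assumes a 0-based 9 x 9 board with 0 for empty cells; FasterBacktracking and canPlace are hypothetical names.

```java
class FasterBacktracking {

    static final int SIZE = 9;
    static final int BOX = 3;
    static final int EMPTY = 0;

    // True when 'value' does not yet appear in the row, column or 3 x 3 subsection of the cell.
    static boolean canPlace(int[][] board, int row, int column, int value) {
        int boxRow = (row / BOX) * BOX;
        int boxColumn = (column / BOX) * BOX;
        for (int i = 0; i < SIZE; i++) {
            if (board[row][i] == value
              || board[i][column] == value
              || board[boxRow + i / BOX][boxColumn + i % BOX] == value) {
                return false;
            }
        }
        return true;
    }

    // Same backtracking scheme as before, but conflicting candidates are rejected before being written.
    static boolean solve(int[][] board) {
        for (int row = 0; row < SIZE; row++) {
            for (int column = 0; column < SIZE; column++) {
                if (board[row][column] != EMPTY) {
                    continue;
                }
                for (int value = 1; value <= SIZE; value++) {
                    if (canPlace(board, row, column, value)) {
                        board[row][column] = value;
                        if (solve(board)) {
                            return true;
                        }
                        board[row][column] = EMPTY;
                    }
                }
                return false; // no value fits this cell, backtrack
            }
        }
        return true; // no empty cell left
    }
}
```

This still explores the cells in a fixed order, so it remains a brute-force search; it only makes each individual check cheaper.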

4. Dancing Links

4.1. Exact Cover

Let’s look at another solution. Sudoku can be described as an Exact Cover problem, which can be represented by an incidence matrix showing the relationship between two kinds of objects (here, sets and the elements they contain).

For example, if we take numbers from 1 to 7 and the collection of sets S = {A, B, C, D, E, F}, where:

- A = {1, 4, 7}

- B = {1, 4}

- C = {4, 5, 7}

- D = {3, 5, 6}

- E = {2, 3, 6, 7}

- F = {2, 7}

Our goal is to select a subcollection of these sets such that each number appears in exactly one of the selected sets, hence the name.

We can represent the problem using a matrix, where columns are numbers and rows are sets:

|   | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| A | 1 | 0 | 0 | 1 | 0 | 0 | 1 |
| B | 1 | 0 | 0 | 1 | 0 | 0 | 0 |
| C | 0 | 0 | 0 | 1 | 1 | 0 | 1 |
| D | 0 | 0 | 1 | 0 | 1 | 1 | 0 |
| E | 0 | 1 | 1 | 0 | 0 | 1 | 1 |
| F | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

The subcollection S* = {B, D, F} is an exact cover:

|   | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| B | 1 | 0 | 0 | 1 | 0 | 0 | 0 |
| D | 0 | 0 | 1 | 0 | 1 | 1 | 0 |
| F | 0 | 1 | 0 | 0 | 0 | 0 | 1 |

Each column contains exactly one 1 across the selected rows.
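The exact cover property is easy to check in code: count how often each number is hit by the chosen sets and require every count to be exactly one. This is a minimal sketch with a hypothetical class name ExactCoverCheck; it only verifies a given selection and doesn’t search for one.

```java
import java.util.Arrays;
import java.util.List;

class ExactCoverCheck {

    // True when every element 1..universeSize is contained in exactly one chosen set.
    static boolean isExactCover(List<int[]> chosen, int universeSize) {
        int[] hits = new int[universeSize + 1];
        for (int[] set : chosen) {
            for (int element : set) {
                hits[element]++;
            }
        }
        for (int element = 1; element <= universeSize; element++) {
            if (hits[element] != 1) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        int[] a = { 1, 4, 7 };
        int[] b = { 1, 4 };
        int[] d = { 3, 5, 6 };
        int[] f = { 2, 7 };
        System.out.println(isExactCover(Arrays.asList(b, d, f), 7)); // B, D, F cover 1..7 once each
        System.out.println(isExactCover(Arrays.asList(a, d, f), 7)); // 7 is covered twice by A and F
    }
}
```

The first call prints true and the second prints false, because A and F both contain 7.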

4.2. Algorithm X

Algorithm X is a “trial-and-error approach” to find all solutions to the exact cover problem. For instance, starting with our example collection S = {A, B, C, D, E, F}, it finds the subcollection S* = {B, D, F}.

Algorithm X works as follows:

- If the matrix A has no columns, the current partial solution is a valid solution; terminate successfully

- Otherwise, choose a column c (deterministically)

- Choose a row r such that A[r][c] = 1 (nondeterministically, i.e., try all possibilities)

- Include row r in the partial solution

- For each column j such that A[r][j] = 1: for each row i such that A[i][j] = 1, delete row i from matrix A; then delete column j from matrix A

- Repeat this algorithm recursively on the reduced matrix A
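The steps above can be sketched without any linked-list machinery by keeping the matrix as a map from row names to the columns they cover. This is only an illustration under our own assumptions: the class AlgorithmXSketch is hypothetical, the column choice is simply the smallest remaining label, and the method stops at the first solution instead of enumerating all of them.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class AlgorithmXSketch {

    // Returns the names of the rows forming an exact cover, or null if none exists.
    static List<String> solve(Map<String, Set<Integer>> rows, Set<Integer> columns) {
        if (columns.isEmpty()) {
            return new ArrayList<>(); // no columns left: the partial solution is complete
        }
        int c = Collections.min(columns); // deterministic column choice (assumption: smallest label)
        for (Map.Entry<String, Set<Integer>> r : rows.entrySet()) {
            if (!r.getValue().contains(c)) {
                continue;
            }
            // Reduced matrix: drop the columns covered by r and every row that shares a column with r.
            Set<Integer> remainingColumns = new TreeSet<>(columns);
            remainingColumns.removeAll(r.getValue());
            Map<String, Set<Integer>> remainingRows = new LinkedHashMap<>();
            for (Map.Entry<String, Set<Integer>> other : rows.entrySet()) {
                if (Collections.disjoint(other.getValue(), r.getValue())) {
                    remainingRows.put(other.getKey(), other.getValue());
                }
            }
            List<String> rest = solve(remainingRows, remainingColumns);
            if (rest != null) {
                rest.add(0, r.getKey()); // include row r in the solution
                return rest;
            }
        }
        return null; // every row for column c led to a dead end
    }

    // The example collection S = {A, B, C, D, E, F} over the numbers 1..7.
    static Map<String, Set<Integer>> exampleRows() {
        Map<String, Set<Integer>> rows = new LinkedHashMap<>();
        rows.put("A", new TreeSet<>(Arrays.asList(1, 4, 7)));
        rows.put("B", new TreeSet<>(Arrays.asList(1, 4)));
        rows.put("C", new TreeSet<>(Arrays.asList(4, 5, 7)));
        rows.put("D", new TreeSet<>(Arrays.asList(3, 5, 6)));
        rows.put("E", new TreeSet<>(Arrays.asList(2, 3, 6, 7)));
        rows.put("F", new TreeSet<>(Arrays.asList(2, 7)));
        return rows;
    }
}
```

For the example collection and the columns 1 to 7, solve() returns the rows B, F and D, which is the exact cover S* = {B, D, F} in the order the rows were chosen.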

An efficient implementation of Algorithm X is the Dancing Links algorithm (DLX for short), suggested by Dr. Donald Knuth.

The following solution has been heavily inspired by this Java implementation.

4.3. Exact Cover Problem

First, we need to create a matrix that will represent the Sudoku puzzle as an Exact Cover problem. The matrix will have 9^3 = 729 rows, i.e., one row for every possible position (9 rows x 9 columns) of every possible number (9 numbers).

The columns will represent the cells of the board (again 9 x 9) multiplied by the number of constraints.

We’ve already defined three constraints:

- each row will have only one number of each kind

- each column will have only one number of each kind

- each subsection will have only one number of each kind

Additionally, there is an implicit fourth constraint:

- only one number can be in a cell

This gives four constraints in total and therefore 9 x 9 x 4 = 324 columns in the Exact Cover matrix:

    private static final int BOARD_SIZE = 9;
    private static final int SUBSECTION_SIZE = 3;
    private static final int NO_VALUE = 0;
    private static final int CONSTRAINTS = 4;
    private static final int MIN_VALUE = 1;
    private static final int MAX_VALUE = 9;
    private static final int COVER_START_INDEX = 1;

    private int getIndex(int row, int column, int num) {
        return (row - 1) * BOARD_SIZE * BOARD_SIZE
          + (column - 1) * BOARD_SIZE + (num - 1);
    }

    private boolean[][] createExactCoverBoard() {
        boolean[][] coverBoard = new boolean
          [BOARD_SIZE * BOARD_SIZE * MAX_VALUE]
          [BOARD_SIZE * BOARD_SIZE * CONSTRAINTS];

        int hBase = 0;
        hBase = checkCellConstraint(coverBoard, hBase);
        hBase = checkRowConstraint(coverBoard, hBase);
        hBase = checkColumnConstraint(coverBoard, hBase);
        checkSubsectionConstraint(coverBoard, hBase);

        return coverBoard;
    }

    private int checkSubsectionConstraint(boolean[][] coverBoard, int hBase) {
        for (int row = COVER_START_INDEX; row <= BOARD_SIZE; row += SUBSECTION_SIZE) {
            for (int column = COVER_START_INDEX; column <= BOARD_SIZE; column += SUBSECTION_SIZE) {
                for (int n = COVER_START_INDEX; n <= BOARD_SIZE; n++, hBase++) {
                    for (int rowDelta = 0; rowDelta < SUBSECTION_SIZE; rowDelta++) {
                        for (int columnDelta = 0; columnDelta < SUBSECTION_SIZE; columnDelta++) {
                            int index = getIndex(row + rowDelta, column + columnDelta, n);
                            coverBoard[index][hBase] = true;
                        }
                    }
                }
            }
        }
        return hBase;
    }

    private int checkColumnConstraint(boolean[][] coverBoard, int hBase) {
        for (int column = COVER_START_INDEX; column <= BOARD_SIZE; column++) {
            for (int n = COVER_START_INDEX; n <= BOARD_SIZE; n++, hBase++) {
                for (int row = COVER_START_INDEX; row <= BOARD_SIZE; row++) {
                    int index = getIndex(row, column, n);
                    coverBoard[index][hBase] = true;
                }
            }
        }
        return hBase;
    }

    private int checkRowConstraint(boolean[][] coverBoard, int hBase) {
        for (int row = COVER_START_INDEX; row <= BOARD_SIZE; row++) {
            for (int n = COVER_START_INDEX; n <= BOARD_SIZE; n++, hBase++) {
                for (int column = COVER_START_INDEX; column <= BOARD_SIZE; column++) {
                    int index = getIndex(row, column, n);
                    coverBoard[index][hBase] = true;
                }
            }
        }
        return hBase;
    }

    private int checkCellConstraint(boolean[][] coverBoard, int hBase) {
        for (int row = COVER_START_INDEX; row <= BOARD_SIZE; row++) {
            for (int column = COVER_START_INDEX; column <= BOARD_SIZE; column++, hBase++) {
                for (int n = COVER_START_INDEX; n <= BOARD_SIZE; n++) {
                    int index = getIndex(row, column, n);
                    coverBoard[index][hBase] = true;
                }
            }
        }
        return hBase;
    }
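As a sanity check on the shape of this matrix, here is a compact, 0-based restatement of the same layout (cell, row, column, then subsection constraints) that lets us count the entries. It’s a sketch under our own naming (CoverMatrixShape, build, countTrue) and isn’t meant to replace the methods above.

```java
class CoverMatrixShape {

    static final int SIZE = 9;
    static final int BOX = 3;
    static final int CELLS = SIZE * SIZE; // 81

    // Builds the 729 x 324 matrix in one pass; every candidate (row, column, n) sets four entries.
    static boolean[][] build() {
        boolean[][] cover = new boolean[CELLS * SIZE][CELLS * 4];
        for (int row = 0; row < SIZE; row++) {
            for (int column = 0; column < SIZE; column++) {
                int box = (row / BOX) * BOX + column / BOX;
                for (int n = 0; n < SIZE; n++) {
                    int index = row * CELLS + column * SIZE + n;
                    cover[index][row * SIZE + column] = true;           // the cell is filled
                    cover[index][CELLS + row * SIZE + n] = true;        // the row contains n
                    cover[index][2 * CELLS + column * SIZE + n] = true; // the column contains n
                    cover[index][3 * CELLS + box * SIZE + n] = true;    // the subsection contains n
                }
            }
        }
        return cover;
    }

    // Counts the true entries in one matrix row.
    static int countTrue(boolean[] cells) {
        int count = 0;
        for (boolean cell : cells) {
            if (cell) {
                count++;
            }
        }
        return count;
    }
}
```

Every one of the 729 rows holds exactly four true entries, one per constraint, and every one of the 324 columns is hit by exactly nine rows.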

Next, we need to update the newly created board with our initial puzzle layout:

    // uses java.util.Arrays
    private boolean[][] initializeExactCoverBoard(int[][] board) {
        boolean[][] coverBoard = createExactCoverBoard();
        for (int row = COVER_START_INDEX; row <= BOARD_SIZE; row++) {
            for (int column = COVER_START_INDEX; column <= BOARD_SIZE; column++) {
                int n = board[row - 1][column - 1];
                if (n != NO_VALUE) {
                    // the cell is a given clue: clear the rows of all other candidates
                    for (int num = MIN_VALUE; num <= MAX_VALUE; num++) {
                        if (num != n) {
                            Arrays.fill(coverBoard[getIndex(row, column, num)], false);
                        }
                    }
                }
            }
        }
        return coverBoard;
    }
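To make the row numbering concrete: the clue 8 in the top-left cell (row 1, column 1) owns the matrix rows 0 to 8, and only row getIndex(1, 1, 8) = 7 keeps its entries after initialization. The sketch below repeats the index formula and adds a hypothetical inverse, decode(), which isn’t part of the code above but shows how a row index maps back to a placement.

```java
class CoverRowIndex {

    static final int BOARD_SIZE = 9;

    // Same formula as getIndex(): row, column and num are all 1-based.
    static int getIndex(int row, int column, int num) {
        return (row - 1) * BOARD_SIZE * BOARD_SIZE
          + (column - 1) * BOARD_SIZE + (num - 1);
    }

    // Hypothetical inverse: recovers { row, column, num } (all 1-based) from a matrix row index.
    static int[] decode(int index) {
        int row = index / (BOARD_SIZE * BOARD_SIZE) + 1;
        int column = (index / BOARD_SIZE) % BOARD_SIZE + 1;
        int num = index % BOARD_SIZE + 1;
        return new int[] { row, column, num };
    }
}
```

For example, getIndex(9, 9, 9) is 728, the last row of the matrix, and decode(728) gives back { 9, 9, 9 }.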

We are ready to move to the next stage now. Let’s create two classes that will link our cells together.

4.4. Dancing Node

The Dancing Links algorithm builds on a basic observation about doubly linked lists of nodes. The following operation:

    node.prev.next = node.next
    node.next.prev = node.prev

removes the node, while:

    node.prev.next = node
    node.next.prev = node

restores the node.
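The removal and restoration trick can be tried out in isolation on a small circular list. A removed node keeps its own prev and next references, which is exactly what allows it to be put back: restoring means pointing its two neighbours at it again. This is a minimal sketch; LinkDemo, Node and walk are hypothetical names.

```java
class LinkDemo {

    static class Node {
        final String name;
        Node prev = this;
        Node next = this;

        Node(String name) {
            this.name = name;
        }

        // Inserts 'node' directly after this node and returns it.
        Node insertAfter(Node node) {
            node.next = this.next;
            node.prev = this;
            this.next.prev = node;
            this.next = node;
            return node;
        }

        // Unlinks this node from its neighbours; its own prev/next stay untouched.
        void remove() {
            prev.next = next;
            next.prev = prev;
        }

        // Re-links this node using the references it still remembers.
        void restore() {
            prev.next = this;
            next.prev = this;
        }
    }

    // Concatenates the names found when walking the circular list once from 'start'.
    static String walk(Node start) {
        StringBuilder names = new StringBuilder(start.name);
        for (Node n = start.next; n != start; n = n.next) {
            names.append(n.name);
        }
        return names.toString();
    }
}
```

With a list a, b, c, calling b.remove() makes walk(a) return "ac", and a subsequent b.restore() brings it back to "abc".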

Each node in DLX is linked to its neighbours on the left, right, up and down.

The DancingNode class will have all the operations needed to add or remove nodes:

    class DancingNode {
        DancingNode L, R, U, D;
        ColumnNode C;

        DancingNode hookDown(DancingNode node) {
            assert (this.C == node.C);
            node.D = this.D;
            node.D.U = node;
            node.U = this;
            this.D = node;
            return node;
        }

        DancingNode hookRight(DancingNode node) {
            node.R = this.R;
            node.R.L = node;
            node.L = this;
            this.R = node;
            return node;
        }

        void unlinkLR() {
            this.L.R = this.R;
            this.R.L = this.L;
        }

        void relinkLR() {
            this.L.R = this.R.L = this;
        }

        void unlinkUD() {
            this.U.D = this.D;
            this.D.U = this.U;
        }

        void relinkUD() {
            this.U.D = this.D.U = this;
        }

        DancingNode() {
            L = R = U = D = this;
        }

        DancingNode(ColumnNode c) {
            this();
            C = c;
        }
    }

4.5. Column Node

The ColumnNode class will link columns together:

    class ColumnNode extends DancingNode {
        int size;
        String name;

        ColumnNode(String n) {
            super();
            size = 0;
            name = n;
            C = this;
        }

        // removes this column and every row that has a node in it
        void cover() {
            unlinkLR();
            for (DancingNode i = this.D; i != this; i = i.D) {
                for (DancingNode j = i.R; j != i; j = j.R) {
                    j.unlinkUD();
                    j.C.size--;
                }
            }
        }

        // undoes cover() by relinking in exactly the reverse order
        void uncover() {
            for (DancingNode i = this.U; i != this; i = i.U) {
                for (DancingNode j = i.L; j != i; j = j.L) {
                    j.C.size++;
                    j.relinkUD();
                }
            }
            relinkLR();
        }
    }

4.6. Solver

Next, we need to create a grid consisting of our DancingNode and ColumnNode objects:

    // uses java.util.List and java.util.ArrayList
    private ColumnNode makeDLXBoard(boolean[][] grid) {
        int COLS = grid[0].length;

        ColumnNode headerNode = new ColumnNode("header");
        List<ColumnNode> columnNodes = new ArrayList<>();

        for (int i = 0; i < COLS; i++) {
            ColumnNode n = new ColumnNode(Integer.toString(i));
            columnNodes.add(n);
            headerNode = (ColumnNode) headerNode.hookRight(n);
        }
        // step from the last column back to the real header
        headerNode = headerNode.R.C;

        for (boolean[] aGrid : grid) {
            DancingNode prev = null;
            for (int j = 0; j < COLS; j++) {
                if (aGrid[j]) {
                    ColumnNode col = columnNodes.get(j);
                    DancingNode newNode = new DancingNode(col);
                    if (prev == null) prev = newNode;
                    col.U.hookDown(newNode);
                    prev = prev.hookRight(newNode);
                    col.size++;
                }
            }
        }

        headerNode.size = COLS;

        return headerNode;
    }

We’ll use a heuristic to choose the next column to cover: we pick the column with the fewest nodes, which keeps the number of branches in the search small:

    private ColumnNode selectColumnNodeHeuristic() {
        int min = Integer.MAX_VALUE;
        ColumnNode ret = null;
        for (
          ColumnNode c = (ColumnNode) header.R;
          c != header;
          c = (ColumnNode) c.R) {
            if (c.size < min) {
                min = c.size;
                ret = c;
            }
        }
        return ret;
    }

Finally, we can recursively search for the answer:

    private void search(int k) {
        if (header.R == header) {
            // every column is covered: 'answer' holds a complete solution
            handleSolution(answer);
        } else {
            ColumnNode c = selectColumnNodeHeuristic();
            c.cover();

            for (DancingNode r = c.D; r != c; r = r.D) {
                answer.add(r);

                // cover all other columns satisfied by row r
                for (DancingNode j = r.R; j != r; j = j.R) {
                    j.C.cover();
                }

                search(k + 1);

                // undo the choice of row r in reverse order
                r = answer.remove(answer.size() - 1);
                c = r.C;

                for (DancingNode j = r.L; j != r; j = j.L) {
                    j.C.uncover();
                }
            }
            c.uncover();
        }
    }

If there are no more columns, then we can print out the solved Sudoku board.
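To see the whole mechanism working on something smaller than Sudoku, the following self-contained sketch runs a condensed Dancing Links search on the 6 x 7 example matrix from the Exact Cover section. It’s a simplified variant written for illustration, not the solver above: the names MiniDlx, Node and Column are hypothetical, rows are identified by their matrix index, and the search stops at the first solution.

```java
import java.util.ArrayList;
import java.util.List;

class MiniDlx {

    static class Node {
        Node L = this, R = this, U = this, D = this;
        Column C;
        int row = -1; // index of the matrix row this node belongs to
    }

    static class Column extends Node {
        int size;

        Column() {
            C = this;
        }

        void cover() {
            R.L = L;
            L.R = R;
            for (Node i = D; i != this; i = i.D) {
                for (Node j = i.R; j != i; j = j.R) {
                    j.D.U = j.U;
                    j.U.D = j.D;
                    j.C.size--;
                }
            }
        }

        void uncover() {
            for (Node i = U; i != this; i = i.U) {
                for (Node j = i.L; j != i; j = j.L) {
                    j.C.size++;
                    j.D.U = j;
                    j.U.D = j;
                }
            }
            R.L = this;
            L.R = this;
        }
    }

    final Column header = new Column();
    final List<Integer> answer = new ArrayList<>();

    MiniDlx(boolean[][] matrix) {
        Column[] columns = new Column[matrix[0].length];
        for (int j = 0; j < columns.length; j++) {
            Column c = columns[j] = new Column();
            c.L = header.L; c.R = header; header.L.R = c; header.L = c; // append to the header row
        }
        for (int i = 0; i < matrix.length; i++) {
            Node first = null;
            for (int j = 0; j < columns.length; j++) {
                if (!matrix[i][j]) continue;
                Node n = new Node();
                n.row = i;
                n.C = columns[j];
                n.U = n.C.U; n.D = n.C; n.C.U.D = n; n.C.U = n;         // append to the column
                if (first == null) {
                    first = n;
                } else {
                    n.L = first.L; n.R = first; first.L.R = n; first.L = n; // append to the row
                }
                n.C.size++;
            }
        }
    }

    // Returns true as soon as one exact cover is found; 'answer' then holds its row indices.
    boolean search() {
        if (header.R == header) return true;
        Column c = (Column) header.R;
        for (Node j = c.R; j != header; j = j.R) {
            if (((Column) j).size < c.size) c = (Column) j; // fewest-nodes heuristic
        }
        c.cover();
        for (Node r = c.D; r != c; r = r.D) {
            answer.add(r.row);
            for (Node j = r.R; j != r; j = j.R) j.C.cover();
            if (search()) return true;
            for (Node j = r.L; j != r; j = j.L) j.C.uncover();
            answer.remove(answer.size() - 1);
        }
        c.uncover();
        return false;
    }
}
```

Feeding it the rows A to F as indices 0 to 5 yields the answer [1, 3, 5], i.e. the rows B, D and F.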

5. Benchmarks

We can compare those two different algorithms by running them on the same computer (this way we can avoid differences in components, the speed of CPU or RAM, etc.). The actual times will differ from computer to computer.

However, we should be able to see relative results, and this will tell us which algorithm runs faster.

The Backtracking Algorithm takes around 250ms to solve the board.

If we compare this with the Dancing Links, which takes around 50ms, we can see a clear winner. Dancing Links is around five times faster when solving this particular example.
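A rough way to take such measurements ourselves is to wrap a solver call in a simple timer. This is only a sketch with a hypothetical helper, SolverTimer.timeMillis(); a single run like this includes JVM warm-up effects, so the numbers should be read as indicative only.

```java
class SolverTimer {

    // Runs the task once and returns the elapsed wall-clock time in milliseconds.
    static long timeMillis(Runnable task) {
        long start = System.nanoTime();
        task.run();
        return (System.nanoTime() - start) / 1_000_000;
    }
}
```

Assuming a solver object with a solve(board) method, a call such as SolverTimer.timeMillis(() -> solver.solve(board)) would return the time taken by one run.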

6. Conclusion

In this tutorial, we’ve discussed two solutions to the Sudoku puzzle in core Java. The backtracking algorithm, which is a brute-force algorithm, can solve the standard 9×9 puzzle easily.

The slightly more complicated Dancing Links algorithm has been discussed as well. Both solve the hardest puzzles within seconds.

Finally, as always, the code used during the discussion can be found over on GitHub.