1. Overview

In this article, we’ll learn how to handle the “Unknown magic byte” error and other deserialization issues that arise when consuming Avro messages using Spring Kafka. We’ll explore the ErrorHandlingDeserializer and see how it helps manage poison pill messages.

Finally, we’ll configure the DefaultErrorHandler along with a DeadLetterPublishingRecoverer to route problematic records to a DLQ topic, ensuring the consumer continues processing without getting stuck.

2. Poison Pills and Magic Bytes

Sometimes, we receive messages that can’t be processed due to format issues or unexpected content – these are called poison pill messages. Instead of trying to process them endlessly, we should handle these messages gracefully.

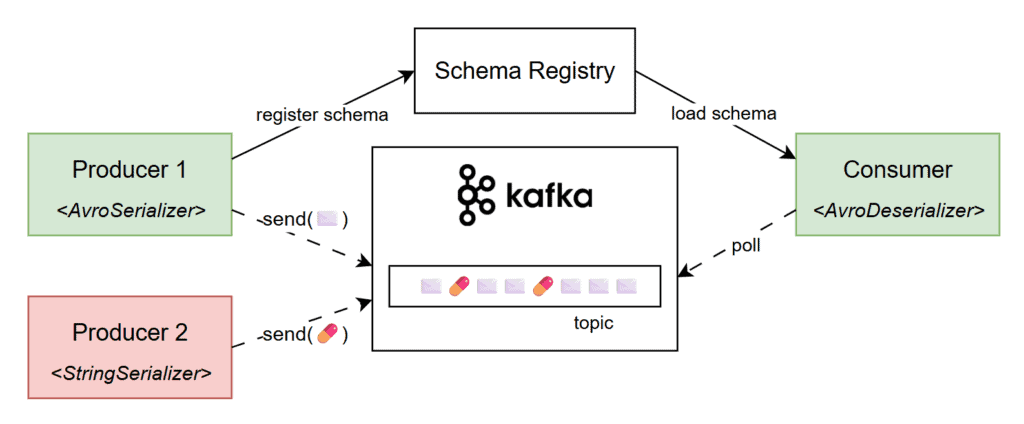

In Kafka, poison pill messages can occur when a consumer expects Avro-encoded data but receives something different. For instance, a producer using a StringSerializer might send a plain-text message to a topic that expects Avro-encoded data, causing the Avro deserializer on the consumer side to fail.

Consequently, we get deserialization errors with the “Unknown magic byte” message. The “magic byte” is the first byte of every message written by Confluent’s Avro serializer: it’s always 0, and it’s followed by a 4-byte schema ID and then the Avro-encoded payload. If the message wasn’t serialized with an Avro serializer and doesn’t begin with this byte, the deserializer throws an error signaling a format mismatch.
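To make the check more tangible, here’s a minimal sketch of the kind of inspection a deserializer performs on the raw bytes. It assumes the Confluent wire format, where a zero byte is followed by a 4-byte big-endian schema ID. The MagicByteInspector class and its methods are hypothetical names used only for illustration; they aren’t part of the Confluent or Spring libraries:

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper (not a library class): inspects the header of a raw Kafka payload. */
final class MagicByteInspector {

    // Assumption: Confluent's wire format starts every message with a zero byte
    static final byte MAGIC_BYTE = 0x0;

    // magic byte (1 byte) + schema ID (4 bytes)
    static final int HEADER_LENGTH = 5;

    static boolean hasMagicByte(byte[] payload) {
        return payload != null && payload.length >= HEADER_LENGTH && payload[0] == MAGIC_BYTE;
    }

    static int schemaId(byte[] payload) {
        if (!hasMagicByte(payload)) {
            throw new IllegalArgumentException("Unknown magic byte!");
        }
        // ByteBuffer reads big-endian by default, skipping the magic byte at index 0
        return ByteBuffer.wrap(payload, 1, 4).getInt();
    }

    public static void main(String[] args) {
        byte[] plainText = "not a valid avro message!".getBytes(StandardCharsets.UTF_8);
        byte[] framed = ByteBuffer.allocate(6)
          .put(MAGIC_BYTE)
          .putInt(42)
          .put((byte) 7)
          .array();

        System.out.println(hasMagicByte(plainText)); // false, the first byte is 'n'
        System.out.println(hasMagicByte(framed));    // true
        System.out.println(schemaId(framed));        // 42
    }
}
```

A plain String produced by a StringSerializer starts with the first character of the text instead of a zero byte, which is why it’s rejected before any Avro decoding takes place.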

3. Reproducing the Issue

To reproduce the issue, we’ll use a simple Spring Boot application that consumes messages in Avro format from a Kafka topic. Our app will use the spring-kafka, avro, and kafka-avro-serializer dependencies:

    <dependency>
        <groupId>org.springframework.kafka</groupId>
        <artifactId>spring-kafka</artifactId>
    </dependency>
    <dependency>
        <groupId>org.apache.avro</groupId>
        <artifactId>avro</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>io.confluent</groupId>
        <artifactId>kafka-avro-serializer</artifactId>
        <version>7.9.1</version>
    </dependency>

Additionally, our service uses a @KafkaListener to listen to all messages from the “baeldung.article.published” topic. For demonstration purposes, we’ll store the article name of all incoming messages in memory, in a List:

    @Component
    class AvroMagicByteApp {

        // logger

        List<String> blog = new ArrayList<>();

        @KafkaListener(topics = "baeldung.article.published")
        public void listen(Article article) {
            LOG.info("a new article was published: {}", article);
            blog.add(article.getTitle());
        }
    }

Next, we’ll add our Kafka-specific application properties. Since we’re using Spring Boot’s built-in Testcontainers support, we can omit the bootstrap-servers property as it’ll be injected automatically. We’ll also set schema.registry.url to “mock://test”, since we won’t be using a real schema registry during testing:

    spring:
      kafka:
        # bootstrap-servers <-- it'll be injected in test by Spring and Testcontainers
        consumer:
          group-id: test-group
          auto-offset-reset: earliest
          key-deserializer: org.apache.kafka.common.serialization.StringDeserializer
          value-deserializer: io.confluent.kafka.serializers.KafkaAvroDeserializer
          properties:
            schema.registry.url: mock://test
            specific.avro.reader: true

That’s it, we can now use Testcontainers to start a Docker container with a Kafka broker and test the happy path of our simple app.

However, if we publish a poison-pill message to our test topic, we’ll encounter the “Unknown magic byte!” exception. To produce the non-compliant message, we’ll leverage a KafkaTemplate instance that uses a StringSerializer and publish a dummy String to our topic:

    @Testcontainers
    @SpringBootTest
    class AvroMagicByteLiveTest {

        @Container
        @ServiceConnection
        static KafkaContainer kafka = new KafkaContainer(DockerImageName.parse("apache/kafka:4.0.0"));

        @Test
        void whenSendingMalformedMessage_thenSendToDLQ() throws Exception {
            stringKafkaTemplate()
              .send("baeldung.article.published", "not a valid avro message!")
              .get();

            Thread.sleep(10_000L);
            // manually verify that the poison-pill message is handled correctly
        }

        private static KafkaTemplate<Object, Object> stringKafkaTemplate() { /* ... */ }
    }

We’ve also temporarily added a Thread.sleep() call that gives us time to observe the application logs. As expected, our service fails to deserialize the message, and we encounter the “Unknown magic byte!” error:

    ERROR o.s.k.l.KafkaMessageListenerContainer - Consumer exception
    java.lang.IllegalStateException: This error handler cannot process 'SerializationException's directly; please consider configuring an 'ErrorHandlingDeserializer' in the value and/or key deserializer
        at org.springframework.kafka...DefaultErrorHandler.handleOtherException(DefaultErrorHandler.java:192)
        [...]
    Caused by: org.apache.kafka...RecordDeserializationException:
        Error deserializing VALUE for partition baeldung.article.published-0 at offset 1.
        If needed, please seek past the record to continue consumption.
        at org.apache.kafka.clients...CompletedFetch.newRecordDeserializationException(CompletedFetch.java:346)
        [...]
    Caused by: org.apache.kafka...errors.SerializationException: Unknown magic byte!
        at io.confluent.kafka.serializers.AbstractKafkaSchemaSerDe.getByteBuffer(AbstractKafkaSchemaSerDe.java:649)
        [...]

Moreover, we’ll also see this error repeatedly because we didn’t handle it properly and never acknowledged the message. Simply put, the consumer gets stuck at that offset, continuously trying to process the malformed message.

4. Error-Handling Deserializer

Fortunately, the error log is detailed and even suggests a possible fix:

    This error handler cannot process 'SerializationException's directly;
    please consider configuring an 'ErrorHandlingDeserializer' in the value and/or key deserializer.

An ErrorHandlingDeserializer in Spring Kafka is a wrapper that catches deserialization errors and allows our application to handle them gracefully, preventing the consumer from crashing. It works by delegating the actual deserialization to another deserializer, such as JsonDeserializer or KafkaAvroDeserializer, and capturing any exceptions thrown during that process.

To configure it, we’ll update the value-deserializer property to ErrorHandlingDeserializer. Additionally, we’ll specify the original deserializer through the spring.deserializer.value.delegate.class key, nested under spring.kafka.consumer.properties:

    spring.kafka:
      consumer:
        value-deserializer: org.springframework.kafka.support.serializer.ErrorHandlingDeserializer
        properties:
          spring.deserializer.value.delegate.class: io.confluent.kafka.serializers.KafkaAvroDeserializer

With this configuration, the “Unknown magic byte!” exception appears only once in the logs. This time, the application handles the poison pill message gracefully and moves on without attempting to deserialize it again.
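The delegate-and-capture idea behind this wrapper can be sketched in plain Java. This is a simplified model, not Spring Kafka’s actual implementation: the SafeDeserializer and Result types are hypothetical, and the delegate is a simple Function standing in for a real Kafka deserializer:

```java
import java.nio.charset.StandardCharsets;
import java.util.function.Function;

/** Simplified model of a delegating, error-capturing deserializer; all names are hypothetical. */
final class SafeDeserializer<T> {

    /** Holds either the deserialized value or the failure, together with the raw bytes. */
    static final class Result<T> {
        final T value;
        final Exception error;
        final byte[] rawData;

        Result(T value, Exception error, byte[] rawData) {
            this.value = value;
            this.error = error;
            this.rawData = rawData;
        }

        boolean failed() {
            return error != null;
        }
    }

    private final Function<byte[], T> delegate;

    SafeDeserializer(Function<byte[], T> delegate) {
        this.delegate = delegate;
    }

    Result<T> deserialize(byte[] data) {
        try {
            return new Result<>(delegate.apply(data), null, data);
        } catch (RuntimeException e) {
            // capture the failure instead of rethrowing, so the caller can skip or recover the record
            return new Result<>(null, e, data);
        }
    }

    public static void main(String[] args) {
        // stand-in delegate: rejects anything that doesn't start with a zero byte
        SafeDeserializer<String> deserializer = new SafeDeserializer<>(bytes -> {
            if (bytes.length == 0 || bytes[0] != 0) {
                throw new IllegalStateException("Unknown magic byte!");
            }
            return new String(bytes, 1, bytes.length - 1, StandardCharsets.UTF_8);
        });

        Result<String> bad = deserializer.deserialize("oops".getBytes(StandardCharsets.UTF_8));
        System.out.println(bad.failed() + " -> " + bad.error.getMessage());
    }
}
```

Because the failure is returned rather than thrown, the polling loop never sees an exception from the deserializer itself, and the raw bytes remain available for later inspection or forwarding.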

5. Publishing to DLQ

So far, we’ve configured an ErrorHandlingDeserializer for the message payload and correctly handled poison pill scenarios. However, if we simply catch exceptions and move on, it becomes difficult to inspect or recover those faulty messages. To address this, we should consider sending them to a DLQ topic.

A Dead Letter Queue (DLQ) is a special topic used to store messages that can’t be processed successfully after one or more attempts. Let’s enable this behavior in our application:

    @Configuration
    class DlqConfig {

        @Bean
        DefaultErrorHandler errorHandler(DeadLetterPublishingRecoverer dlqPublishingRecoverer) {
            return new DefaultErrorHandler(dlqPublishingRecoverer);
        }

        @Bean
        DeadLetterPublishingRecoverer dlqPublishingRecoverer(KafkaTemplate<byte[], byte[]> bytesKafkaTemplate) {
            return new DeadLetterPublishingRecoverer(bytesKafkaTemplate);
        }

        @Bean("bytesKafkaTemplate")
        KafkaTemplate<?, ?> bytesTemplate(ProducerFactory<?, ?> kafkaProducerFactory) {
            return new KafkaTemplate<>(kafkaProducerFactory,
              Map.of(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, ByteArraySerializer.class.getName()));
        }
    }

As we can observe, we define a DefaultErrorHandler bean, which determines which exceptions are retryable. In our case, deserialization exceptions are considered non-retryable, so they will be sent directly to the DLQ. When creating the error handler, we inject a DeadLetterPublishingRecoverer instance via the constructor.
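The retry-or-recover decision can be illustrated with a small standalone model. It’s only a sketch of the general idea and doesn’t mirror Spring Kafka’s internals: the RetryOrRecover class, its constructor parameters, and the chosen exception types are all assumptions made for this example:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.function.Consumer;

/** Simplified model of retry-or-recover error handling; all names are hypothetical. */
final class RetryOrRecover {

    private final Set<Class<? extends Exception>> nonRetryable;
    private final int maxAttempts;
    private final Consumer<String> recoverer;

    RetryOrRecover(Set<Class<? extends Exception>> nonRetryable, int maxAttempts, Consumer<String> recoverer) {
        this.nonRetryable = nonRetryable;
        this.maxAttempts = maxAttempts;
        this.recoverer = recoverer;
    }

    /** Returns how many times the listener was invoked for this record. */
    int process(String record, Consumer<String> listener) {
        int attempts = 0;
        while (true) {
            attempts++;
            try {
                listener.accept(record);
                return attempts;
            } catch (RuntimeException e) {
                boolean retryable = !nonRetryable.contains(e.getClass());
                if (!retryable || attempts >= maxAttempts) {
                    // give up and hand the record over, e.g. to a dead letter publisher
                    recoverer.accept(record);
                    return attempts;
                }
            }
        }
    }

    public static void main(String[] args) {
        List<String> deadLetters = new ArrayList<>();
        RetryOrRecover handler = new RetryOrRecover(Set.of(IllegalArgumentException.class), 3, deadLetters::add);

        int poison = handler.process("poison", r -> { throw new IllegalArgumentException("cannot deserialize"); });
        int flaky = handler.process("flaky", r -> { throw new IllegalStateException("downstream unavailable"); });

        System.out.println(poison + " " + flaky + " " + deadLetters); // 1 3 [poison, flaky]
    }
}
```

The non-retryable failure is recovered after a single attempt, while the other one is retried until the attempt limit is reached. Retrying a record that can never be deserialized would only delay the inevitable, which is why such failures skip the retries.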

The dlqPublishingRecoverer, in turn, forwards the failed messages to a DLQ topic using a KafkaTemplate with a ByteArraySerializer, since the exact format of a poison pill message is unknown. Additionally, it’s responsible for resolving the DLQ topic name; by default, it appends “-dlt” to the original topic name:

    @Test
    void whenSendingMalformedMessage_thenSendToDLQ() throws Exception {
        stringKafkaTemplate()
          .send("baeldung.article.published", "not a valid avro message!")
          .get();

        var dlqRecord = listenForOneMessage("baeldung.article.published-dlt", ofSeconds(5L));

        assertThat(dlqRecord.value())
          .isEqualTo("not a valid avro message!");
    }

    private static ConsumerRecord<?, ?> listenForOneMessage(String topic, Duration timeout) {
        return KafkaTestUtils.getOneRecord(
          kafka.getBootstrapServers(), "test-group-id", topic, 0, false, true, timeout);
    }

As we can see, configuring the ErrorHandlingDeserializer allowed us to gracefully handle malformed messages. After that, the customized DefaultErrorHandler and DeadLetterPublishingRecoverer beans enabled us to push these faulty messages to a DLQ topic.
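The default naming rule for the DLQ topic is simple enough to model in a few lines. The sketch below assumes the default behavior of appending “-dlt” to the topic name and keeping the original partition number. The DeadLetterRouting class and its Destination type are hypothetical stand-ins, not Spring Kafka classes:

```java
/** Hypothetical model of resolving a dead letter destination from the original record's coordinates. */
final class DeadLetterRouting {

    static final String DLT_SUFFIX = "-dlt";

    /** Stand-in for a topic/partition pair. */
    static final class Destination {
        final String topic;
        final int partition;

        Destination(String topic, int partition) {
            this.topic = topic;
            this.partition = partition;
        }

        @Override
        public String toString() {
            return topic + "-" + partition;
        }
    }

    // Assumption: the failed record goes to "<topic>-dlt", on the same partition number
    static Destination resolve(String originalTopic, int originalPartition) {
        return new Destination(originalTopic + DLT_SUFFIX, originalPartition);
    }

    public static void main(String[] args) {
        System.out.println(resolve("baeldung.article.published", 0)); // baeldung.article.published-dlt-0
    }
}
```

Under this assumption, the DLQ topic needs at least as many partitions as the original topic. That’s worth keeping in mind if we create the DLQ topic manually rather than relying on automatic topic creation.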

6. Conclusion

In this tutorial, we covered how to resolve the “Unknown magic byte” error and other deserialization issues that can arise when handling Avro messages with Spring Kafka. We explored how the ErrorHandlingDeserializer helps prevent consumers from being blocked by problematic messages.

Finally, we reviewed the concept of Dead Letter Queues and configured Spring Kafka beans to route poison pill messages to a dedicated DLQ topic, ensuring smooth and uninterrupted processing.

As always, the code presented in this article is available over on GitHub.

The post How to Fix Unknown Magic Byte Errors in Apache Kafka first appeared on Baeldung.