1. Introduction

Spring WebFlux provides Reactive Programming to web applications. The asynchronous and non-blocking nature of Reactive design improves performance and memory usage. Project Reactor provides those capabilities to efficiently manage data streams.

However, backpressure is a common problem in these kinds of applications. In this tutorial, we'll explain what it is and how to apply a backpressure mechanism in Spring WebFlux to mitigate it.

2. Backpressure in Reactive Streams

Due to the non-blocking nature of Reactive Programming, the server doesn't send the complete stream at once. It can push the data concurrently as soon as it is available. Thus, the client waits less time to receive and process the events. But, there are issues to overcome.

Backpressure in software systems is the overload that builds up when traffic exceeds what a communication channel can carry. In other words, emitters of information overwhelm consumers with data they are not able to process.

Over time, the term has also come to mean the mechanism used to control and handle this overload: the protective actions a system takes to control downstream forces.

2.1. What Is Backpressure?

In Reactive Streams, backpressure also defines how to regulate the transmission of stream elements. In other words, it controls how many elements the recipient can consume.

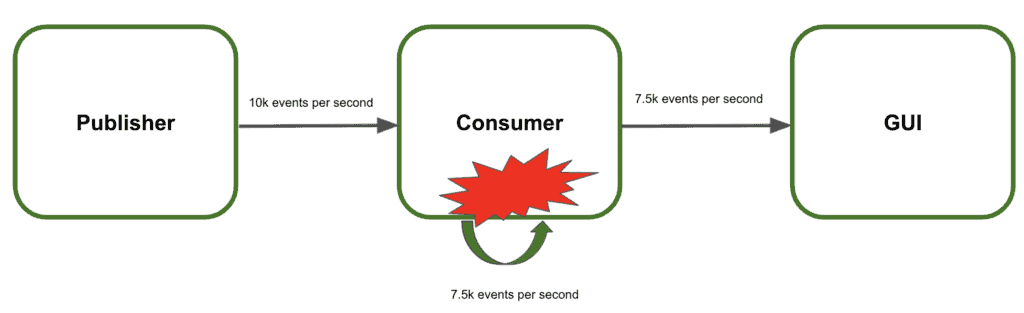

Let's use an example to clearly describe what it is:

- The system contains three services: the Publisher, the Consumer, and the Graphical User Interface (GUI)

- The Publisher sends 10000 events per second to the Consumer

- The Consumer processes them and sends the result to the GUI

- The GUI displays the results to the users

- The Consumer can only handle 7500 events per second


At this rate, the Consumer cannot keep up with the incoming events, which is the backpressure problem. Consequently, the system would collapse and the users would not see the results.
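To make the gap concrete, here is a minimal sketch of the arithmetic behind the example. The class and method names are hypothetical and only serve as illustration; the two rates are the ones from the scenario above.

```java
// Hypothetical helper for illustration only; not part of Spring WebFlux or Reactor.
final class BacklogEstimate {

    /** Number of events still waiting after the given number of seconds. */
    static long backlogAfter(long producedPerSecond, long consumedPerSecond, long seconds) {
        long surplusPerSecond = Math.max(0, producedPerSecond - consumedPerSecond);
        return surplusPerSecond * seconds;
    }

    public static void main(String[] args) {
        // Rates from the scenario: 10000 events/s produced, 7500 events/s consumed.
        for (long seconds : new long[] {1, 10, 60}) {
            System.out.println(seconds + " s -> "
                + backlogAfter(10_000, 7_500, seconds) + " events waiting");
        }
    }
}
```

With these numbers the backlog grows by 2500 events every second, so after one minute 150000 events are waiting to be processed.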

2.2. Using Backpressure to Prevent Systemic Failures

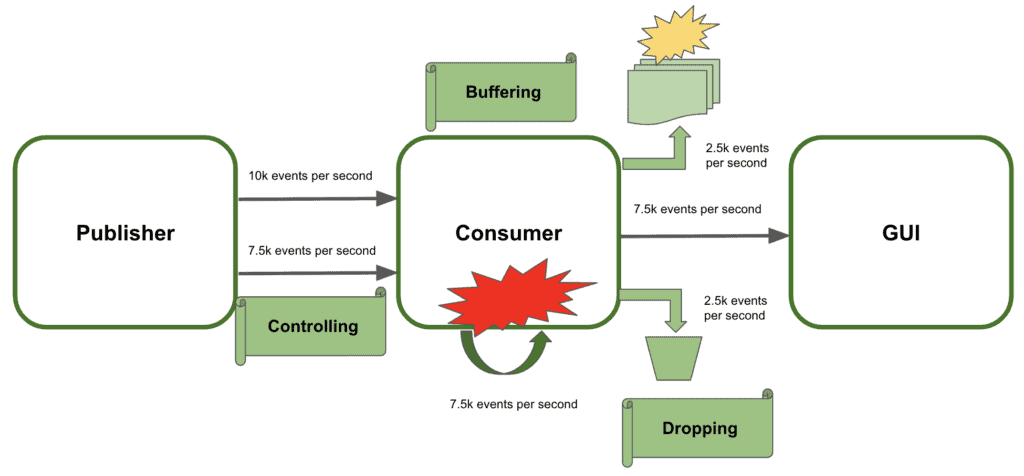

The recommendation here would be to apply some sort of backpressure strategy to prevent systemic failures. The objective is to efficiently manage the extra events received:

- Controlling the data stream sent is the first option. The publisher slows down the pace of the events so that the consumer is not overloaded. Unfortunately, this is not always possible, and we would then need to look at the other available options

- Buffering the extra data is the second choice. With this approach, the consumer temporarily stores the remaining events until it can process them. The main drawback is that an unbounded buffer can grow until the application runs out of memory

- Dropping the extra events and losing track of them is the third option. Although this solution is far from ideal, it keeps the system from collapsing
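The buffering and dropping options above can be combined in a bounded buffer: events are stored while there is room and dropped once the limit is reached. The following is a minimal, single-threaded sketch using only the JDK. `BoundedBuffer` is a hypothetical class written for this illustration, not a Reactor or WebFlux API.

```java
import java.util.ArrayDeque;
import java.util.Queue;

// Hypothetical illustration; not thread-safe and not part of any library.
final class BoundedBuffer<T> {
    private final Queue<T> queue = new ArrayDeque<>();
    private final int capacity;
    private long dropped;

    BoundedBuffer(int capacity) {
        this.capacity = capacity;
    }

    /** Buffers the event when there is room; otherwise drops it and returns false. */
    boolean offer(T event) {
        if (queue.size() >= capacity) {
            dropped++;
            return false;
        }
        queue.add(event);
        return true;
    }

    /** Hands the oldest buffered event to the consumer, or null when the buffer is empty. */
    T poll() {
        return queue.poll();
    }

    int size() {
        return queue.size();
    }

    long dropped() {
        return dropped;
    }

    public static void main(String[] args) {
        BoundedBuffer<Integer> buffer = new BoundedBuffer<>(3);
        for (int event = 1; event <= 5; event++) {
            buffer.offer(event); // events 4 and 5 do not fit and are dropped
        }
        System.out.println("buffered=" + buffer.size() + ", dropped=" + buffer.dropped());
    }
}
```

Capping the buffer trades data loss for bounded memory use. Whether that trade is acceptable depends on how valuable each individual event is.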


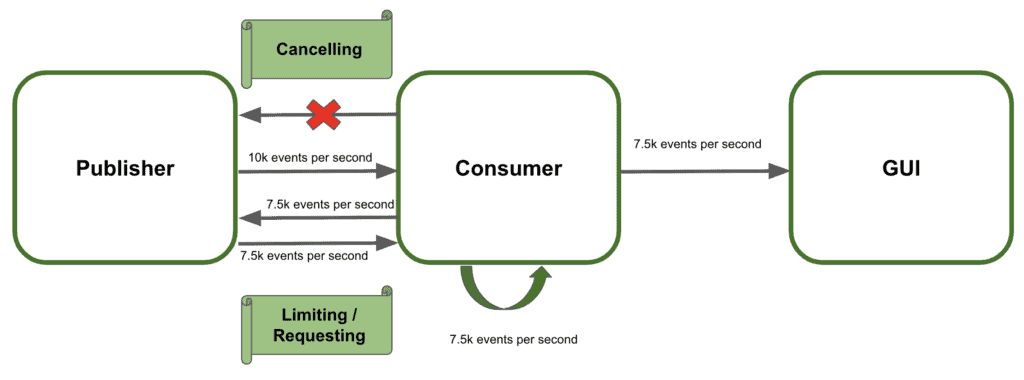

2.3. Controlling Backpressure

We'll focus on controlling the events emitted by the publisher. Basically, there are three strategies to follow:

- Sending new events only when the subscriber requests them. This is a pull strategy that gathers elements from the emitter on request

- Limiting the number of events to receive on the client side. Working as a limited push strategy, the publisher can only send a maximum number of items to the client at once

- Canceling the data stream when the consumer cannot process more events. In this case, the receiver can abort the transmission at any time and subscribe to the stream again later
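The pull and cancel strategies above can be sketched with the JDK's own Reactive Streams interfaces in `java.util.concurrent.Flow`, without any Reactor dependency. `RangePublisher` and `BatchingSubscriber` are hypothetical classes written for this illustration. The publisher is a simplified, synchronous one and does not claim full compliance with the Reactive Streams specification.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

final class PullAndCancelSketch {

    /** Emits 1..count, but never more elements than the subscriber has requested. */
    static final class RangePublisher implements Flow.Publisher<Integer> {
        private final int count;

        RangePublisher(int count) {
            this.count = count;
        }

        @Override
        public void subscribe(Flow.Subscriber<? super Integer> subscriber) {
            subscriber.onSubscribe(new Flow.Subscription() {
                private int next = 1;
                private long demand;
                private boolean emitting;
                private boolean done;

                @Override
                public void request(long n) {
                    // Simplification: a compliant publisher would signal onError for n <= 0.
                    if (done || n <= 0) {
                        return;
                    }
                    demand += n;
                    if (emitting) {
                        return; // re-entrant call from onNext; the loop below picks up the demand
                    }
                    emitting = true;
                    while (!done && demand > 0 && next <= count) {
                        demand--;
                        subscriber.onNext(next++);
                    }
                    emitting = false;
                    if (!done && next > count) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() {
                    done = true; // stop emitting; no completion signal is sent
                }
            });
        }
    }

    /** Pulls elements in batches and cancels once a given number has been received. */
    static final class BatchingSubscriber implements Flow.Subscriber<Integer> {
        final List<Integer> received = new ArrayList<>();
        boolean completed;
        private final int batch;
        private final int limit;
        private Flow.Subscription subscription;
        private int inBatch;

        BatchingSubscriber(int batch, int limit) {
            this.batch = batch;
            this.limit = limit;
        }

        @Override
        public void onSubscribe(Flow.Subscription subscription) {
            this.subscription = subscription;
            subscription.request(batch); // pull the first batch
        }

        @Override
        public void onNext(Integer item) {
            received.add(item);
            if (received.size() >= limit) {
                subscription.cancel(); // the consumer has had enough
            } else if (++inBatch == batch) {
                inBatch = 0;
                subscription.request(batch); // batch consumed, pull the next one
            }
        }

        @Override
        public void onError(Throwable error) {
            error.printStackTrace();
        }

        @Override
        public void onComplete() {
            completed = true;
        }
    }

    public static void main(String[] args) {
        BatchingSubscriber subscriber = new BatchingSubscriber(3, 7);
        new RangePublisher(10).subscribe(subscriber);
        System.out.println(subscriber.received + " completed=" + subscriber.completed);
    }
}
```

In this sketch the subscriber pulls three elements at a time and cancels after the seventh, so the publisher never emits elements 8 to 10 and never signals completion.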


3. Handling Backpressure in Spring WebFlux

Spring WebFlux provides an asynchronous, non-blocking flow of reactive streams. Project Reactor is responsible for backpressure within Spring WebFlux. It internally uses Flux functionalities to apply the mechanisms that control the events produced by the emitter.

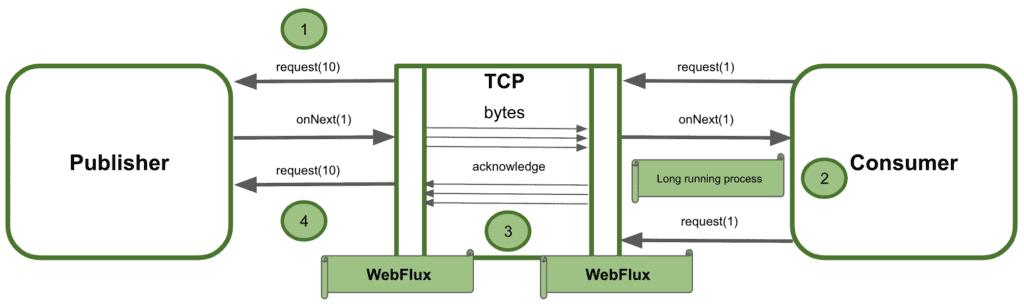

WebFlux uses TCP flow control to regulate the backpressure in bytes. But it does not handle the logical elements the consumer can receive. Let's see the interaction flow happening under the hood:

- The WebFlux framework is responsible for converting events to bytes in order to transfer and receive them through TCP

- It may happen that the consumer starts a long-running job before requesting the next logical element

- While the receiver is processing the events, WebFlux enqueues bytes without acknowledgment because there is no demand for new events

- Due to the nature of the TCP protocol, if there are new events, the publisher will continue sending them to the network


In conclusion, the diagram above shows that the demand in logical elements can differ between the consumer and the publisher. Spring WebFlux does not manage backpressure across the interacting services as a whole system. It handles it with the consumer independently, and then with the publisher in the same way, without taking into account the logical demand between the two services.

So, Spring WebFlux does not handle backpressure as we might expect. Let's see in the next section how to implement a backpressure mechanism in Spring WebFlux!

4. Implementing Backpressure Mechanism with Spring WebFlux

We'll use the Flux implementation to handle the control of the events received. Therefore, we'll expose the request and response body with backpressure support on the read and the write side. Then, the producer would slow down or stop until the consumer's capacity frees up. Let's see how to do it!

4.1. Dependencies

To implement the examples, we'll simply add the Spring WebFlux starter and Reactor test dependencies to our pom.xml:

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-webflux</artifactId>
    </dependency>
    <dependency>
        <groupId>io.projectreactor</groupId>
        <artifactId>reactor-test</artifactId>
        <scope>test</scope>
    </dependency>

4.2. Request

The first option is to give the consumer control over the events it can process. Thus, the publisher waits until the receiver requests new events. In summary, the client subscribes to the Flux and then processes the events based on its demand:

    // Uses reactor.core.publisher.Flux and reactor.test.StepVerifier
    @Test
    public void whenRequestingChunks10_thenMessagesAreReceived() {
        Flux<Integer> request = Flux.range(1, 50);

        // The subscriber pulls the stream in five batches of 10 elements
        request.subscribe(
          System.out::println,
          err -> err.printStackTrace(),
          () -> System.out.println("All 50 items have been successfully processed!!!"),
          subscription -> {
              for (int i = 0; i < 5; i++) {
                  System.out.println("Requesting the next 10 elements!!!");
                  subscription.request(10);
              }
          }
        );

        // Start with an initial demand of 0 so that elements arrive only after thenRequest(n)
        StepVerifier.create(request, 0)
          .expectSubscription()
          .thenRequest(10)
          .expectNext(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
          .thenRequest(10)
          .expectNext(11, 12, 13, 14, 15, 16, 17, 18, 19, 20)
          .thenRequest(10)
          .expectNext(21, 22, 23, 24, 25, 26, 27, 28, 29, 30)
          .thenRequest(10)
          .expectNext(31, 32, 33, 34, 35, 36, 37, 38, 39, 40)
          .thenRequest(10)
          .expectNext(41, 42, 43, 44, 45, 46, 47, 48, 49, 50)
          .verifyComplete();
    }

With this approach, the emitter never overwhelms the receiver. In other words, the client stays in control of how many events it processes.

The test above uses StepVerifier to check the producer's behavior with respect to backpressure. We expect the next n items only when thenRequest(n) is called.

4.3. Limit

The second option is to use the limitRate() operator from Project Reactor. It allows setting the number of items to prefetch at once. One interesting feature is that the limit applies even when the subscriber requests more events to process. The emitter splits the events into chunks, so that no single request to the source exceeds the limit:

    @Test
    public void whenLimitRateSet_thenSplitIntoChunks() {
        // Operators return a new Flux, so limitRate() must be part of the chain
        Flux<Integer> limit = Flux.range(1, 25)
          .limitRate(10);

        // The subscriber asks for 15 elements at once; the source still sees capped requests
        limit.subscribe(
          value -> System.out.println(value),
          err -> err.printStackTrace(),
          () -> System.out.println("Finished!!"),
          subscription -> subscription.request(15)
        );

        StepVerifier.create(limit)
          .expectSubscription()
          .thenRequest(15)
          .expectNext(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
          .expectNext(11, 12, 13, 14, 15)
          .thenRequest(10)
          .expectNext(16, 17, 18, 19, 20, 21, 22, 23, 24, 25)
          .verifyComplete();
    }

4.4. Cancel

Finally, the consumer can cancel the stream of events at any moment. For this example, we'll use another approach. Project Reactor allows us to implement our own Subscriber or to extend BaseSubscriber. So let's see how the receiver can abort the reception of new events at any time by overriding a hook of that class:

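    @Test
    public void whenCancel_thenSubscriptionFinished() {
        Flux<Integer> cancel = Flux.range(1, 10).log();

        cancel.subscribe(new BaseSubscriber<Integer>() {
            @Override
            protected void hookOnNext(Integer value) {
                // Ask for three more elements and print the current one...
                request(3);
                System.out.println(value);
                // ...then abort the subscription, so no further elements are delivered
                cancel();
            }
        });

        // StepVerifier consumes the first three elements and then cancels
        StepVerifier.create(cancel)
          .expectNext(1, 2, 3)
          .thenCancel()
          .verify();
    }

5. Conclusion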

In this tutorial, we showed what backpressure is in Reactive Programming and how to avoid it. Spring WebFlux supports backpressure through Project Reactor. Therefore, it can provide availability, robustness, and stability when the publisher overwhelms the consumer with too many events. In summary, it can prevent systemic failures due to high demand.

As always, the code is available over on GitHub.

The post Backpressure Mechanism in Spring WebFlux first appeared on Baeldung.